Cybersecurity is drowning in today’s ocean of security-relevant data, but a surprising number of security teams have yet to integrate AI technology into their operations centers.

By Mike Armistead, co-founder Respond

But we have already reached the tipping point where it is not only possible, but required, to efficiently mix workloads between human and machine. The ideal partnership is one where the machines perform certain tasks deeply, consistently and autonomously, while people bring creativity, imagination and cunning to the table.

It’s popular with the press to attack the emerging “Era of Artificial Intelligence.” Warnings from Elon Musk and Stephen Hawking about how robots will steal jobs and machines will make bad choices for us humans emphasize the downside of A.I. Meanwhile, although there were an estimated 37,461 US fatalities in 2016 due to automobile accidents according to the National Highway Traffic Safety Administration, the media are quick to headline the 4 fatalities involving autonomous or driver-assisted vehicles that have happened since 2016.

Yet demand has shown us that consumers and businesses obviously see benefits to A.I.-related services as they consistently adopt virtual assistants, fly on A.I.-flown airplanes that pilot seamlessly in fog, or use smart software to organize piles of information and photos.

To figure out where cybersecurity really stands in this balance, let’s dismiss the notion that we are close to being able to deploy true, sentient A.I. that can think and act on its own, à la SkyNet/Terminator. While it is right for Musk and Hawking to raise the warning as the technology quickly evolves – for there are ethical issues that accompany our advances – in practical terms we are far from being there.

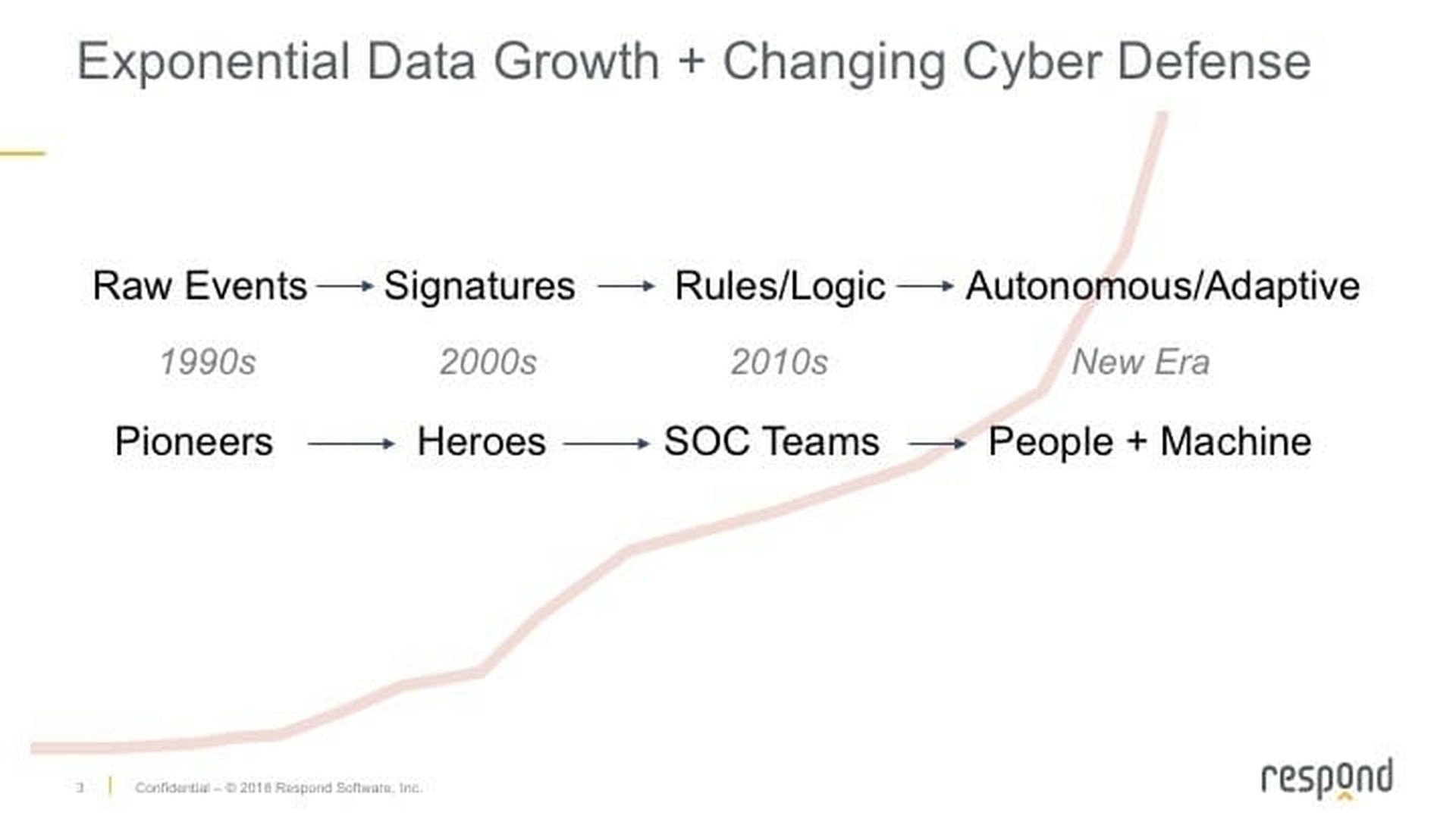

Looking at the history of how companies have approached security operations gives us one clue. The rise of the connected and digital enterprise forced companies to build up their cyber defenses progressively. It started in the 1990s and 2000s with individuals handling the monitoring and analysis of security telemetry and the response to threats. As businesses became more connected, attack surfaces grew…and so did the sophistication of the adversaries. Now teams were needed to handle the load.

Unfortunately, a gap between the number of capable, skilled security personnel and the volume of security-relevant data that needed to be analyzed became apparent and started to expand. In the last decade, this gap widened as exponential data growth swamped teams of security professionals. And because almost every security operations center was designed with a person at its center – a design that made sense when the data could be processed within that team’s capacity – people have now become the choke-point for effective security.

So today’s question isn’t whether we should or shouldn’t adopt A.I. technologies in our security operations; it is how we can marry humans with machines to best reduce or eliminate a gap that is keeping us from performing effective cyber defense.

Generally, let machines do what machines do best and people do what people do best.

Machines that are properly “trained” (not a trivial matter, so ask the vendor/provider about it) are excellent at processing their task consistently. Over and over. Even at 2 a.m. during a holiday week. They don’t get tired or distracted, no matter how boring the work may be. Given this, the best tasks for machines in cybersecurity tend to be those that monitor and process high volumes of data in well-known scenarios, especially at times and on days when people are either scarce or not at their best.

Most A.I.- and ML-powered products in the market today focus on reducing the volume of data through “smart filters” and anomaly detection in a store-and-query mode. This is certainly helpful. Be mindful, however, of providing “yet another alert” to the security team. Machines should reduce the workload, not add to it. Anomaly detection – even based on A.I. – can have high false-positive rates that lead to reinvestigations.

The emerging field of modern expert systems and automated decision analysis is less about reducing the volume of data through filters and anomaly detection and more about emulating and automating the decision-making process of skilled analysts. The benefit of this technology is that it is meant to directly take over the tasks that are hard for humans – considering 20, 30 or 100 different facts consistently across data – to determine a likelihood that something is malicious and worthy of a response. Once again, the best place for this technology is in well-known, human-challenging scenarios.
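To make the idea of weighing many facts into a single likelihood concrete, here is a minimal sketch in Python. It is not any vendor's method: it assumes a simple naive-Bayes style combination of evidence, and the fact names, likelihood ratios, prior and threshold are all hypothetical values chosen for illustration.

```python
import math

# Hypothetical evidence weights, expressed as likelihood ratios:
# P(fact observed | malicious) / P(fact observed | benign).
# Values above 1 raise suspicion; values below 1 lower it.
LIKELIHOOD_RATIOS = {
    "known_bad_ip": 50.0,
    "off_hours_login": 3.0,
    "asset_is_critical": 1.5,
    "signature_often_false_positive": 0.2,
}


def malicious_probability(facts, prior=0.01):
    """Combine observed facts into a probability that an event is malicious.

    Assumes the facts are independent (the naive-Bayes simplification)
    and starts from an assumed prior probability of malice.
    """
    log_odds = math.log(prior / (1.0 - prior))
    for fact in facts:
        log_odds += math.log(LIKELIHOOD_RATIOS[fact])
    return 1.0 / (1.0 + math.exp(-log_odds))


def should_escalate(facts, threshold=0.5):
    """Return True when the combined probability reaches the threshold."""
    return malicious_probability(facts) >= threshold
```

The machine applies the same weights to every event, at any hour, which is the consistency a tired analyst juggling dozens of facts cannot guarantee. In this sketch, a fact that argues against malice (a signature known to misfire) can pull an otherwise suspicious event back below the escalation threshold.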

An obvious place for machines is in domains that require scale and speed. People are just not good at processing volumes of data over time. New architectures that go beyond database queries into streaming analysis avoid massive costs of storage. Streaming architectures enable processing on the fly, similar to humans, although at speeds and scale that are orders of magnitude beyond our own capabilities.
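The difference between store-and-query and streaming can be sketched in a few lines of Python. This is an illustrative example only, assuming events arrive in timestamp order as hypothetical `(timestamp, source, outcome)` tuples; the window size and failure limit are made-up parameters. Each event is examined once as it arrives, and only a small sliding window per source is kept in memory rather than the full history.

```python
from collections import defaultdict, deque


def stream_alerts(events, window_seconds=60, max_failures=3):
    """Yield (timestamp, source) when a source exceeds max_failures
    failed events within the sliding time window.

    Assumes events are (timestamp, source, outcome) tuples in
    timestamp order. Nothing is stored beyond the current window.
    """
    recent = defaultdict(deque)  # source -> timestamps of recent failures
    for timestamp, source, outcome in events:
        if outcome != "failure":
            continue
        window = recent[source]
        window.append(timestamp)
        # Drop failures that have aged out of the window.
        while window and timestamp - window[0] > window_seconds:
            window.popleft()
        if len(window) > max_failures:
            yield timestamp, source
```

Because `stream_alerts` is a generator, it can sit on top of any iterable event feed and emit findings as the data flows past, instead of waiting for the data to land in a database and be queried later.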

People are good at the types of investigations that require ingenuity. People chase hunches, experiment with ideas and follow threads in non-standard ways. This is the realm of threat hunting and proactive investigations or setting up deception grids. Machines can help enable this by taking on some of the monitoring and analysis tasks, liberating the human from a monitoring console so they have the bandwidth to tackle these types of proactive security measures. Now, we just need to marry them the right way.