Cybersecurity analytics for large-scale protection

Most modern security analytic methods and tools are best suited to larger infrastructure with large data sets. That is, it is unlikely that one would use real-time, telemetry-based monitoring with 24/7 coverage for a personal computer, unless that personal computer was connected to a larger system or contained highly sensitive information. In contrast, applying such telemetry-based analysis capabilities to large-scale national critical infrastructure protection would likely be quite important.

What type of security telemetry is required to protect infrastructure?

Telemetry involves useful data collected by remote sensors for the purpose of improved visibility, insight, and monitoring of a target environment. Engineering issues related to the integration of telemetry into a security environment include: where to put sensors, how to securely pull the telemetry to a collection point, and how to tune the sensors to collect the right type and amount of data, among other things. These decisions must be addressed before any telemetry-based system can be effective in improving a particular organization’s cybersecurity.

The particular security telemetry required to protect large-scale systems will also vary from one implementation to another. For instance, pure information technology (IT) ecosystems will typically generate different log information and event data than an organization that combines IT systems with operational technology (OT), perhaps in the context of industrial controls or factory automation. Many OT systems, for example, run unique protocols on top of the traditional protocol suite, and these protocols need to be evaluated for potential risks.

Nevertheless, requirements can be developed for the specific attributes required in any telemetry system being used to protect infrastructure from attacks. The security telemetry attributes listed below, for example, are best suited to large-scale systems, but can also be adapted and used for smaller systems:

- Relevancy – Telemetry for cybersecurity must be relevant to the protection goal. Event logs for systems not considered important or activity records for networks that do not support mission-critical applications might therefore not be relevant to the central protection task.

- Accuracy – The measurements inherent in collected data must accurately portray the particular system attribute being analyzed. If such measurements are crudely estimated, for example, bad decisions could result from the interpretation of such measurements.

- Coverage – Telemetry for large-scale infrastructure requires an assessment of its scope, to ensure that the data being obtained is broad enough to support analytic efforts.

- Detail – The data collected to support infrastructure protection must also include sufficient detail to expose relevant threat issues. Event logs, for example, that only show the beginning and end of particular sessions without more detail are likely to be of limited use to security teams in assessing a given threat or attack.

- Attribution – Knowing the provenance of collected data can be helpful to establish context. In cases where attribution has privacy implications, caution must be exercised to ensure that such efforts are conducted consistent with the relevant legal and policy frameworks.
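As a rough illustration, some of the attributes above can be expressed as simple checks on collected records. The following is a minimal sketch, not a prescribed implementation: the `TelemetryRecord` type, its field names, and the three helper functions are all hypothetical, and real telemetry schemas will differ.

```python
from dataclasses import dataclass, field


@dataclass
class TelemetryRecord:
    # All field names here are hypothetical, chosen for illustration.
    source: str       # sensor that produced the record (attribution)
    asset: str        # system the record describes
    event_type: str
    details: dict = field(default_factory=dict)


def is_relevant(record, critical_assets):
    """Relevancy: keep only records about assets tied to the protection goal."""
    return record.asset in critical_assets


def has_detail(record, required_fields):
    """Detail: the record must carry every field analysts need."""
    return all(name in record.details for name in required_fields)


def coverage(records, critical_assets):
    """Coverage: fraction of critical assets that reported at least once."""
    if not critical_assets:
        return 0.0
    seen = {r.asset for r in records if r.asset in critical_assets}
    return len(seen) / len(critical_assets)
```

A low `coverage` value would suggest that some critical assets are not reporting at all, while a record that fails `has_detail` is the kind of "session start and end only" log described above.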

In the early days of collection for analytics, the general view was the more data, the better. Most security operations center (SOC) teams today would likely contest this view, arguing instead that the right data from key sources is most important. This approach to data collection minimizes unnecessary work and improves security process flow. That said, large-scale data analysis platforms have increased the ability to ingest and sort large amounts of data, which is improving outcomes for SOC analysts and leaders by making more of that data relevant to decision-makers.

How can security telemetry be aggregated into useful information?

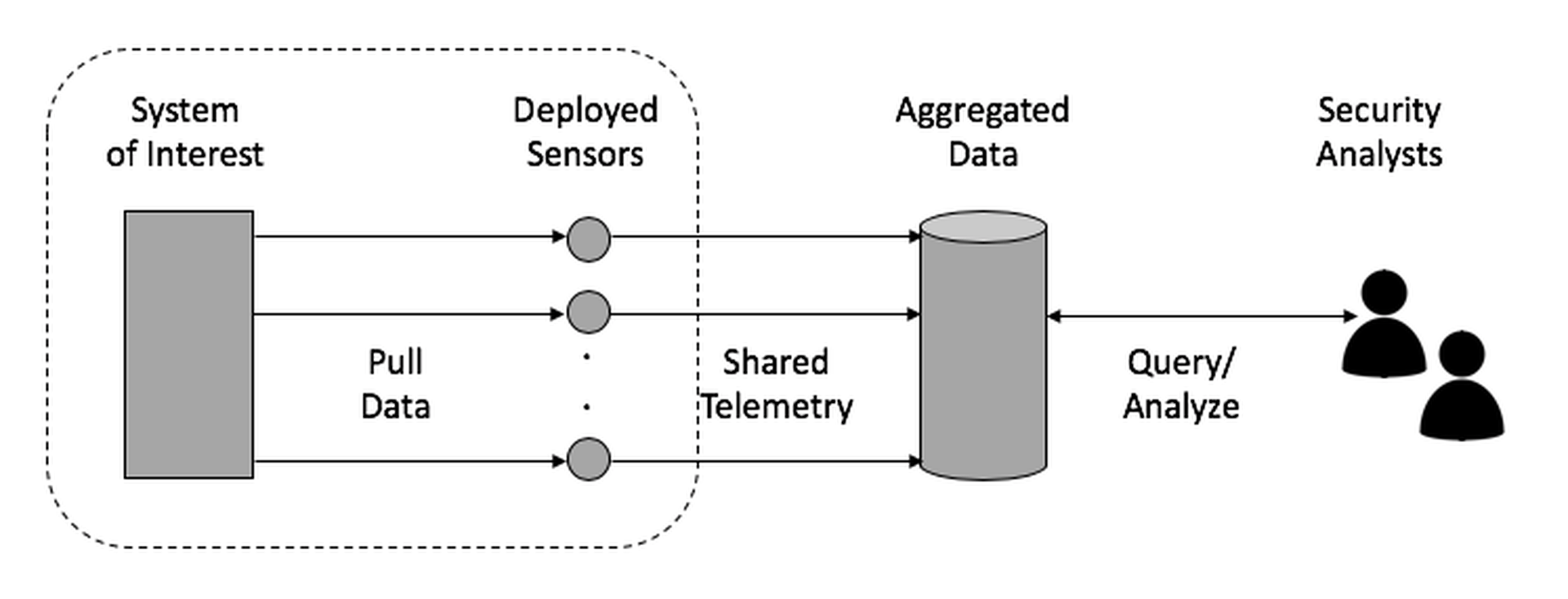

The most familiar aggregation method for collected data involves deploying sensors to pull data from relevant systems. The sensors are tasked to create and share telemetry to identify local conditions of interest. The tasking is often pre-programmed, but can be adjusted from a management center. Aggregated data is shared securely with an analysis function in a backend for query and alerting. This summarized data is then provided in a user-friendly form to analysts to conduct further assessment and triage, and to take action, where appropriate. The setup shown in Figure 3-1 is a typical arrangement for telemetry processing.

Figure 3-1. Traditional Telemetry Collection and Aggregation Method

The system of interest and the deployed sensors are often logically or physically adjacent, whereas the aggregation and analysis function is often performed remotely. This is not a rule, and situations do arise where the relevant data or systems are not co-located with sensors, but these situations are typically resolved by routing the relevant data to the sensor through the network. This cadence of pulling, sharing, and analyzing data, from sensors to an analytic backend and then to an analyst in a SOC, is a common characteristic of modern telemetry-based infrastructure security protection.
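The sensor-to-backend flow described here can be sketched in a few lines. This is a simplified illustration under stated assumptions: events are plain dictionaries with hypothetical `asset` and `event_type` keys, each sensor delivers a batch of events, and the backend alerts when a per-event-type count reaches a threshold. Secure transport and sensor tasking are omitted.

```python
from collections import Counter


def aggregate(sensor_batches):
    """Combine per-sensor event batches into counts per (asset, event_type)."""
    counts = Counter()
    for batch in sensor_batches:
        for event in batch:
            counts[(event["asset"], event["event_type"])] += 1
    return counts


def alerts(counts, thresholds):
    """Return the (asset, event_type) keys whose count meets its threshold."""
    return sorted(
        key for key, n in counts.items()
        if key[1] in thresholds and n >= thresholds[key[1]]
    )
```

In practice the summarized counts, rather than the raw events, are what an analyst would first see in a console, with the raw data kept available for follow-up queries.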

It is worth highlighting that such detailed monitoring would typically be unnecessary for a small system. Large-scale infrastructures, in contrast, are much harder to understand, which is why pulling data from various systems and networks, then combining and analyzing it at scale, allows for a better (if approximate) understanding of how such large-scale systems operate. The eminent computer scientist Edsger Dijkstra once captured the important difference between large and small systems with the following comment about an approach taken by his predecessor John von Neumann:

“For simple mechanisms, it is often easier to describe how they work than what they do, while for more complicated mechanisms, it is usually the other way around.”

E. Dijkstra

What types of algorithmic strategies are used to protect infrastructure in real-time?

Algorithms relied upon to identify relevant issues from collected data are often designed to predict or detect security-related conditions. Such prediction comes in the form of so-called indications and warnings (I&W) that are observed before some undesired consequence can occur. Obviously, I&W detection is preferred to observing an attack that has already started or even been completed, because in these cases, the damage might have already taken place, making it harder to mitigate.

The algorithmic goal is correlation, which involves automated comparison of different data sets to identify connections, patterns, or other relationships that can connect events. The current state of the art in establishing correlations involves security analysts curating the overall process. The term threat hunting has emerged to describe the general process of experts employing technology capabilities to draw fundamental security conclusions.
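One simple form of correlation is to pair events from two data sets that share an attribute and occur close together in time. The sketch below assumes two hypothetical logs, authentication events and network events, each a list of dictionaries with an `ip` key and a numeric `ts` timestamp in seconds. It is only one of many possible correlation rules.

```python
def correlate(auth_events, net_events, window_seconds=60):
    """Pair events from two logs that share an IP and occur close in time."""
    # Index the network events by IP so each lookup is cheap.
    by_ip = {}
    for ev in net_events:
        by_ip.setdefault(ev["ip"], []).append(ev)

    matches = []
    for a in auth_events:
        for n in by_ip.get(a["ip"], []):
            if abs(a["ts"] - n["ts"]) <= window_seconds:
                matches.append((a, n))
    return matches
```

The time window and the choice of join key are assumptions an analyst would tune; this curation step is where threat hunting expertise enters the process.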

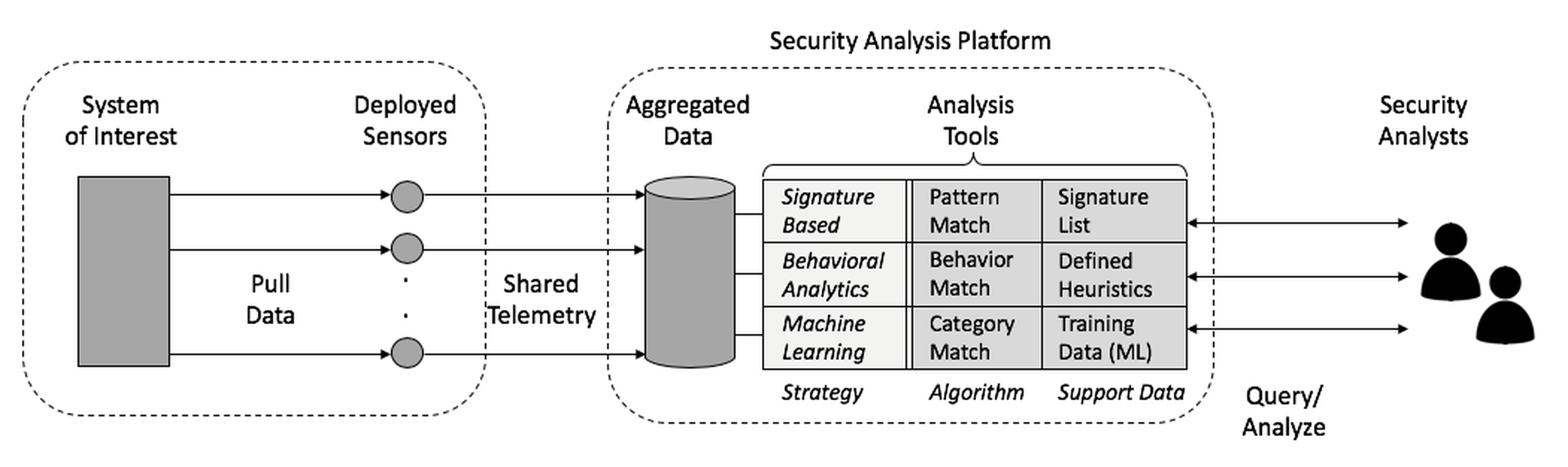

Three strategies exist in the development of algorithms to support correlation between collected data and potential attack indications in a target infrastructure:

- Signature Matching – This involves comparing known patterns with observed activity. Signature patterns are often lists of suspicious Internet Protocol (IP) addresses or domains to be identified in activity logs. Signatures can also include the type, size, location, hash values, and names of files used by an attacker, or even representations of attack steps. If good signatures are known, as with anti-virus software, then signature matching can be powerful. If relevant patterns are unknown or can be easily side-stepped or worked around, then the technique does not work.

- Behavioral Analytics – This approach involves dynamic comparison of behavior patterns with observed activity. Typical activity being reviewed is whether an application is trying to establish an outside network connection or invoke some unusual operation. Behavioral analysis can be more complex than signature-based review, but can also be significantly more powerful by detecting unknown threat vectors or attack techniques modified to avoid signatures. The strength of this approach is that signatures need not be known, so detecting zero-day based attacks becomes relatively more tractable. One of the weaknesses of such a system is that immature behavioral profiles can result in high false positive rates.

- Machine Learning – In this approach, considered a branch of artificial intelligence, machine learning tools use powerful algorithms to scan input and make determinations based on training examples or abstractions from data in order to establish a categorization of the analyzed data. This approach to security threat detection is similar to how machine learning can be used to visualize and categorize objects. The strength of such systems is their potential to recognize unknown attacks based on the learning process. The weakness is that this method requires an enormous amount of data to be stored and analyzed over time, whether with a data set that can be replayed for machine learning or ingested live for deep learning.

Figure 3-2. Combining and Integrating Correlation Algorithm Strategies

As depicted in Figure 3-2, platforms can combine and integrate all three strategies into one effective security analysis platform for infrastructure protection. Nothing precludes the pre-processing of telemetry for known signatures, especially in cases where a rich source of intelligence is available. Similarly, if some obvious behavioral pattern exposes a malicious intent, then it is reasonable to deploy this method in advance of more powerful machine learning analysis.
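The staged ordering described above, with cheap checks first and heavier analysis later, can be sketched as a single triage function. Everything here is illustrative: the event keys `dst_ip` and `app`, the `bad_ips` indicator set, the per-application `baseline` of previously seen destinations, and the optional `model` callable are all hypothetical stand-ins, and the learned classifier itself is left to the caller.

```python
def triage(event, bad_ips, baseline, model=None):
    """Run the cheap checks first and return the name of the stage that fired."""
    # Stage 1: signature matching against known-bad indicators.
    if event["dst_ip"] in bad_ips:
        return "signature"

    # Stage 2: behavioral check. Has this application contacted
    # this destination before, according to its baseline?
    if event["dst_ip"] not in baseline.get(event["app"], set()):
        return "behavior"

    # Stage 3: optional learned classifier supplied by the caller.
    if model is not None and model(event):
        return "model"

    return None
```

Note that an immature `baseline` would push many benign events into the "behavior" result, which is the false positive weakness noted for behavioral analytics.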

Is it possible for organizations to work together to reduce risk?

In general, organizations within the same industry sector or across key critical sectors will have comparable threat issues. This suggests a mutual interest in working together to share data to improve identification of both known and emerging threats. A reasonable heuristic for such processing is that having more relevant data improves establishment of data relationships. So, it should be a goal for any team protecting critical infrastructure to try to work with peer groups in the same industry.

There are challenges, however, for organizations to cooperate and share data to reduce their mutual cybersecurity risk:

- Competition – The competitive forces between organizations might cause them to question whether cooperation is in their mutual best interests. Some industries will naturally gravitate toward coordination (e.g., airlines cooperating on safety issues). But other industries might include participants who benefit when a competitor is breached, thereby potentially reducing the likelihood of cooperation.

- Attribution – If shared information can be easily attributed to a reporting source, then cases may emerge where this information can be used to embarrass the source, or even influence customer behavior. Anonymity options are thus important in any information sharing initiative designed to support cooperative cyber protection.

- Liability/Regulation – This challenge emerges where the legal implications of an incident might be unknown or under consideration by regulators or policy makers. Obviously, highly regulated organizations may have certain reporting obligations, but in the earliest stages of an incident, such obligations might not be known and the liability implications of sharing or reporting may be unclear. This creates a tendency to hold off on sharing until the legal and liability posture of an incident is more fully understood.
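One possible way to support the anonymity options mentioned under the attribution challenge is to replace the reporting source with a keyed hash before a report is shared. The sketch below uses Python's standard `hmac` and `hashlib` modules. The report layout, the `drop_fields` default, and the idea that a trusted intermediary holds `secret_key` are assumptions made for illustration, and this is pseudonymization rather than full anonymity, since the remaining report contents could still identify a source.

```python
import hashlib
import hmac


def pseudonymize(report, secret_key, drop_fields=("contact", "hostname")):
    """Return a copy of the report with the source replaced by a keyed hash."""
    # Drop fields that directly identify the reporting organization.
    shared = {k: v for k, v in report.items() if k not in drop_fields}

    # The same source always maps to the same pseudonym under a given key,
    # so recipients can still group reports without learning who sent them.
    digest = hmac.new(secret_key, report["source"].encode("utf-8"), hashlib.sha256)
    shared["source"] = digest.hexdigest()[:16]
    return shared
```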

None of these challenges are insurmountable, but all require careful attention if the goal is to create a cooperative protection solution for multiple infrastructure organizations. In the next section of this report, we will examine how a typical commercial platform might serve the technical needs of a SOC team for correlating security telemetry while also addressing the practical operational challenges for reporting organizations.

Part 4 will appear on Aug. 5.