At least eight Canadian banks were recently affected by a phishing operation built on automated, Telegram-based bot services that phoned would-be victims and coaxed out information that could facilitate account takeovers.

Bank customers whose accounts are protected by one-time passwords may not be as secure as they think, because this technique bypasses SMS-based OTPs through social engineering and impersonation of financial institutions. Essentially, after victims receive what appears to be an official communication from their bank, the bot tricks them into giving away their account credentials, phone numbers and other details.

Researchers at Intel 471, who reported on the operation this week, say that since June 2021 they’ve seen a notable uptick in these Telegram bot services, which are sold for a fee on the dark web. Besides banks, the bots target social media platforms, payment services and apps, investment and crypto platforms, and wireless carriers.

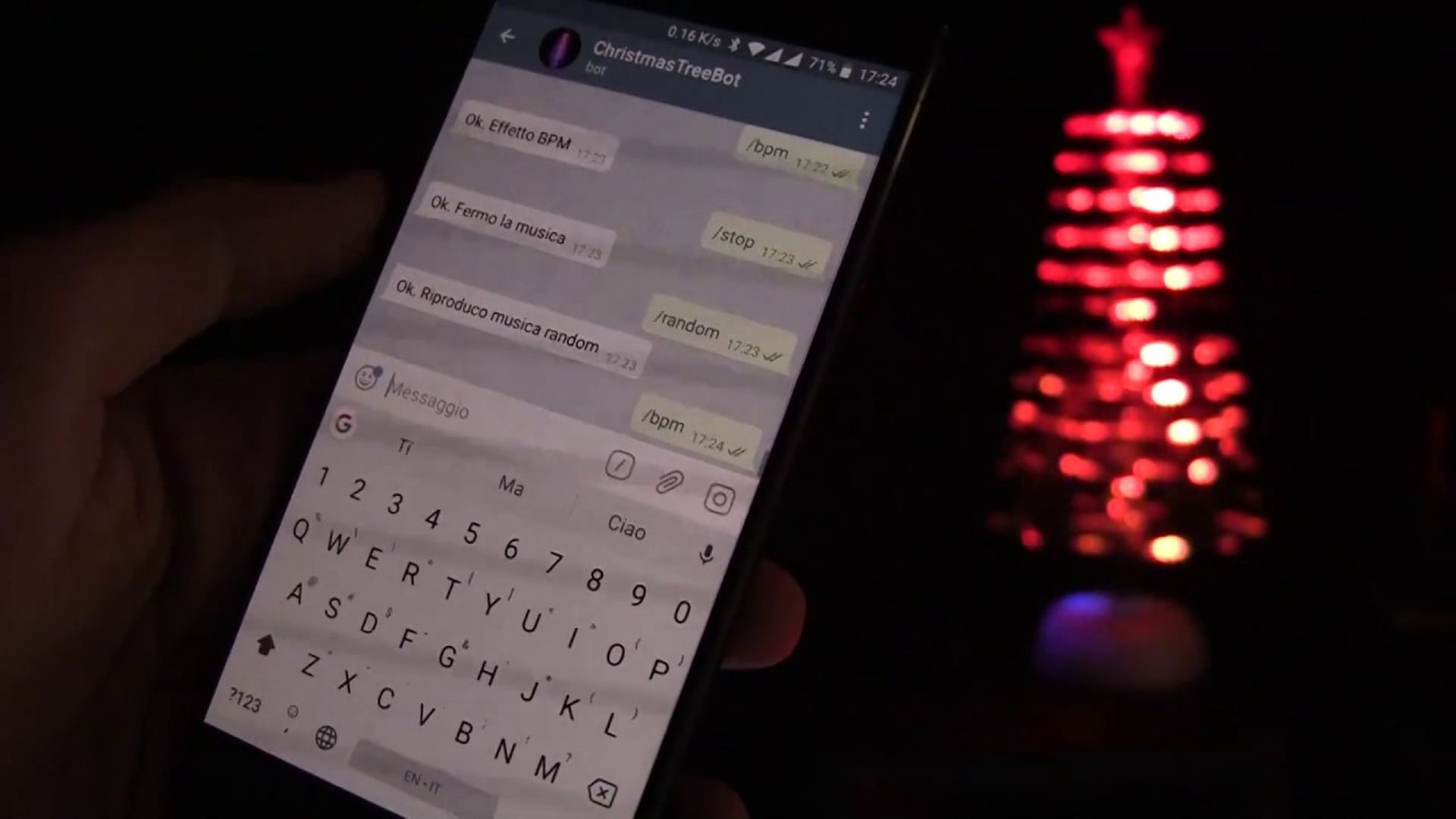

According to Intel 471’s blog post, all of the services either operate via a Telegram bot or provide customer support to users over a Telegram channel. The services appeal to dark web shoppers because they cut down the tedious, manual labor attackers must perform when running a phishing campaign, especially one that relies on calls and texts. “While there’s some programming ability needed to create the bots, a bot user only needs to spend money to access the bot, obtain a phone number for a target, and then click a few buttons,” the report states.

Last February, Akamai released a similar report on phishing schemes designed to trick UK bank customers into handing over enough information to bypass their 2FA protections, though those scams were more manual and human-powered. The operation Intel 471 describes is more automated: “it doesn't seem like there's manual intervention” typically involved in the calls, noted Steve Ragan, an Akamai researcher.

Ragan told SC Media he possessed a sample of a phishing kit that leverages a Telegram bot. “In the sample I've got, they're dumping all of the [exfiltrated] credentials to a Telegram channel, which has become a very popular exfiltration method now.”

Indeed, “Telegram has been a popular meeting place for cybercrime actors for a while, particularly because of its ease of use, and because of its supposed… end-to-end encryption,” agreed Greg Otto, a researcher at Intel 471, in an interview with SC Media. “It's an easy platform… [for cybercriminals] to get into, write some code and start creating their own business, as illegal as it is.”

Telegram also gives bot users closed-off forums of their own. “I think Telegram presents the best-case scenario for these bots,” Otto said, “in that they have these closed-off communication circles, but there's also the infrastructure to support these bots… if you need customer service, so to speak, or if you want to talk to other people in the community and [ask] ‘What have you done to tailor your attacks so you can get the most money?’”

Gabi Cirlig, principal threat intel analyst at Human Security, called Telegram's bot API a double-edged sword. “On one side, its flexible and open architecture facilitated constructing wonderful communities built around Telegram bots that do moderation, voting, games and media sharing,” said Cirlig. “However, the same ease of use and flexibility drew in crowds of malware developers as well.”

“We at Human see it used more and more as a covert communication channel for complex malware and involved in huge phishing schemes,” Cirlig continued. “The best thing about it [for cybercriminals] is that its messages are already encrypted, so busting down an operation can be even harder as you don't have to deploy your own layer of obfuscation.”

How Telegram bots work

SMSRanger, for one, lets malicious users pick from an array of scripts targeting various businesses and their customers with a simple slash command. The scam artist merely provides the target’s phone number and the bot does the rest, using speech and language processing software to ask the call recipient a series of questions as if the bank (or other business) had placed an automated call.

“You can have it where it's PayPal, where it's going after people's Apple accounts, Google Pay accounts,” said Otto. “Also, any bank that you want, because really, you're just feeding it into a natural language processor that announces whatever bank that you want. But also, there's carrier mode which specifically goes after telecom and that is used… [for] SIM swapping.”

A second program, BloodOTPbot, calls bank customers (the attacker must spoof the calling number) and asks them to verify various account details before sending a fake login OTP for the victim to enter, even though the code does nothing. Meanwhile, the bot sends the attacker updates as the process unfolds. “There are constant notifications in almost real-time that allows an attacker or an actor to know how close they are to getting the information they need to in order to break into whatever account that they're trying to break into,” said Otto.

Intel 471’s report said a third bot, SMS Buster, “provides options to disguise a call to make it appear as a legitimate contact from a specific bank while letting the attackers choose to dial from any phone number. From there, an attacker could follow a script to trick a victim into providing sensitive details such as an ATM personal identification number (PIN), card verification value (CVV) and OTP, which could then be sent to an individual’s Telegram account.”

Otto said that it’s not necessarily easy for victims to distinguish these malicious automated calls from actual communications, especially if they fit a past pattern of genuine activity. Meanwhile, on the bank side, “there's no fraud or any flagging… because the bank security methods show that all of the security apparatus that they have has been followed,” Otto continued. “You have legitimate bank accounts that are being emptied by actors that were basically given a password to an account and the doors opened and they walked in, signed out a bunch of money and walked away.”

That leads to the other key takeaway: two-factor authentication based on OTPs is not a foolproof mechanism for account management and verification, though it is certainly better than nothing. After all, it still works.

“There's a reason why they're targeting OTPs, and why they're targeting SMS-based… authentication: because [2FA] works,” said Ragan. Authentication takes effort, time and sometimes money to defeat, he explained, and therefore cybercriminals are turning to tools that help them more easily bypass these protections.

At this point, “they're getting really good at trying to filter out security mechanisms,” said Ragan, and so in response, “you’re going to see the amperage go up on security controls in some of the big financial [companies]… And the race begins anew.”

How will financial institutions step up their game further? Instead of relying on call- or text-based MFA, Intel 471 recommends “more robust forms of 2FA,” including “Time-Based One Time Password (TOTP) codes from authentication apps, push-notification-based codes, or a FIDO security key.”
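To illustrate why app-based TOTP codes differ from SMS codes, here is a minimal Python sketch of how an authenticator app and a server could each derive the same code from a shared secret, following RFC 6238 with its common defaults (HMAC-SHA1, 30-second time steps, six digits). The function names and the one-step clock-drift window are illustrative assumptions, not any bank's or vendor's implementation.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    # The counter is encoded as an 8-byte big-endian integer.
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte pick an offset.
    offset = mac[-1] & 0x0F
    # Take 4 bytes at that offset and clear the top bit (31-bit value).
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % (10 ** digits)).zfill(digits)


def totp(key: bytes, timestamp: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP with counter = floor(time / step)."""
    if timestamp is None:
        timestamp = time.time()
    return hotp(key, int(timestamp // step), digits)


def verify_totp(key: bytes, submitted: str, timestamp: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Hypothetical server check: accept codes up to `window` steps off (clock drift)."""
    if timestamp is None:
        timestamp = time.time()
    current = int(timestamp // step)
    for counter in range(max(0, current - window), current + window + 1):
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(hotp(key, counter, digits), submitted):
            return True
    return False


if __name__ == "__main__":
    shared_secret = b"12345678901234567890"  # RFC 6238 test seed, not a real key
    code = totp(shared_secret)
    print("current code:", code)
    print("accepted:", verify_totp(shared_secret, code))
```

Because the code is computed on the customer's device, it never crosses the phone network where it could be intercepted or SIM-swapped away. It can, however, still be read aloud to a convincing caller, which is one reason the recommendation also lists FIDO security keys.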

“Just give more options to your customers when it comes to multi-factor authentication, whether it's one-time push alerts, hardware like YubiKeys or Titan keys,” said Otto. “Or allow integration with app authenticators...”

In addition, Otto advises banks and other services to be explicit and upfront about their policies on 2FA and OTPs. For instance, if a bank does not normally call customers about their accounts or OTPs, it should say so, so that customers can recognize a suspicious call when one arrives.