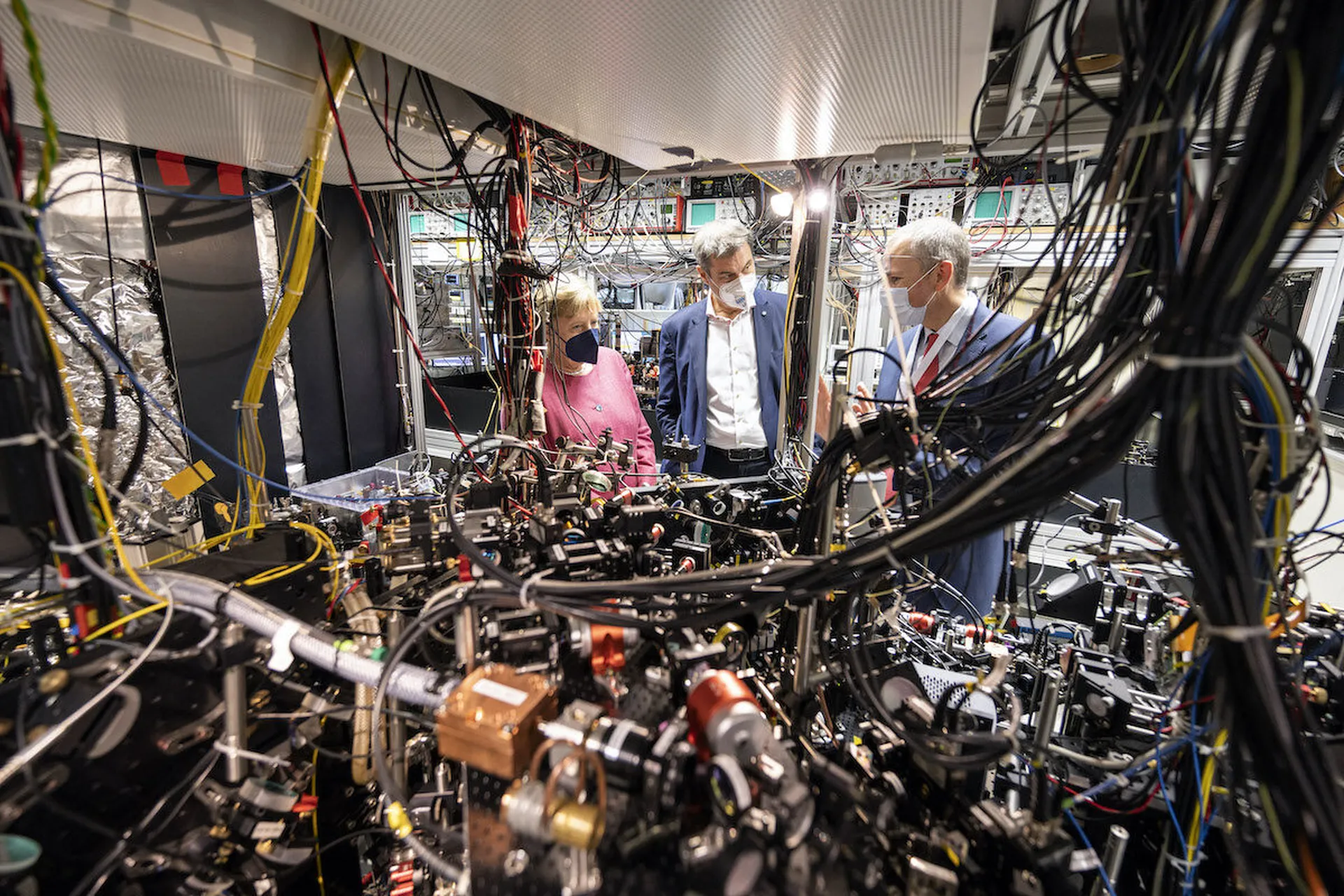

Over the next few months, the National Institute of Standards and Technology will finalize a short list of new encryption algorithms and standards that are designed to withstand the threat of quantum computers, which are expected to one day mature to the point where they are capable of breaking many classical forms of encryption.

This will necessitate a widespread effort over the next decade to transition computers and systems that rely on classical encryption — especially public key encryption — to newer algorithms and protocols that are explicitly designed to withstand quantum-based hacks.

But simply knowing that the world will need to move to new forms of encryption is not the same thing as knowing when or how to do it. It also doesn't tell you what to do if and when a new algorithm later turns out not to be a viable candidate.

That kind of uncertainty is why NIST wants multiple algorithms and a number of backup options to be part of any new standards. It’s also one of the reasons it is working to build what is known as “crypto agility” into any forthcoming standards and migration guidance. The term essentially means designing encryption protocols in a way that can facilitate the swift replacement of the underlying algorithm with minimal disruption in the event that it proves not up to the task.
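To make the idea concrete, here is a minimal sketch of what crypto agility can look like in software. It is an illustration only, not something NIST has published: the `AgileTagger` class, its registry, and the algorithm names are hypothetical, and the two HMAC variants are stand-ins chosen because they ship with Python's standard library. They are not post-quantum algorithms. The point is the shape of the design: callers never name an algorithm directly, and every protected message records which algorithm produced it.

```python
import hashlib
import hmac
from typing import Callable, Dict

# Hypothetical registry mapping an algorithm name to a tagging function.
# The HMAC variants are stand-ins for illustration; they are NOT
# post-quantum algorithms.
DEFAULT_REGISTRY: Dict[str, Callable[[bytes, bytes], bytes]] = {
    "hmac-sha256": lambda key, msg: hmac.new(key, msg, hashlib.sha256).digest(),
    "hmac-sha512": lambda key, msg: hmac.new(key, msg, hashlib.sha512).digest(),
}


class AgileTagger:
    """Authenticates messages without hard-coding the algorithm in callers."""

    def __init__(self, key: bytes, default_alg: str) -> None:
        # Copy the registry so retiring an algorithm affects only this instance.
        self.algorithms = dict(DEFAULT_REGISTRY)
        if default_alg not in self.algorithms:
            raise ValueError(f"unknown algorithm: {default_alg}")
        self.key = key
        self.default_alg = default_alg

    def protect(self, msg: bytes) -> dict:
        # Record the algorithm name next to the tag so a verifier
        # knows which algorithm to apply later.
        tag = self.algorithms[self.default_alg](self.key, msg)
        return {"alg": self.default_alg, "msg": msg, "tag": tag}

    def verify(self, envelope: dict) -> bool:
        alg = envelope["alg"]
        if alg not in self.algorithms:
            return False  # retired or unknown algorithms are rejected
        expected = self.algorithms[alg](self.key, envelope["msg"])
        return hmac.compare_digest(expected, envelope["tag"])

    def retire(self, alg: str, replacement: str) -> None:
        # Swap the default and stop accepting the retired algorithm.
        if replacement not in self.algorithms or replacement == alg:
            raise ValueError("replacement must be a different, registered algorithm")
        self.default_alg = replacement
        self.algorithms.pop(alg, None)
```

In this sketch, calling `retire("hmac-sha256", replacement="hmac-sha512")` is the entire migration from the caller's point of view: new messages are tagged with the replacement, and envelopes produced by the retired algorithm stop verifying. Real protocols face harder questions, such as how long to keep accepting the old algorithm, but the same separation between callers and algorithm choice is what makes a swift swap possible.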

The National Cybersecurity Center of Excellence, a research center within NIST, is working on a migration playbook to help organizations identify vulnerable systems and game out questions around implementation. Officials expect the process of switching out encryption protocols at most large organizations to be a years-long slog, one fraught with uncertainty.

One of the biggest challenges is expected to be visibility — many organizations lack the process and expertise to identify which parts of their IT environment are reliant on the form of encryption that is most at risk from future quantum codebreaking.
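As a rough illustration of what that visibility exercise involves, the sketch below flags entries in a cryptographic inventory that rely on public key algorithms. Everything here is assumed for the example: the `CryptoUse` record, the system names, and the idea that an organization already has such a list at all, which is precisely the part many lack. The set of at-risk families reflects the widely accepted view that RSA, Diffie-Hellman and elliptic curve schemes are the ones a large quantum computer running Shor's algorithm would break.

```python
from dataclasses import dataclass
from typing import List

# Public key families built on factoring or discrete logarithms, the
# problems Shor's algorithm would solve on a large quantum computer.
QUANTUM_VULNERABLE = {"RSA", "DSA", "DH", "ECDH", "ECDSA"}


@dataclass
class CryptoUse:
    """One place where a system relies on a cryptographic algorithm (hypothetical record)."""
    system: str
    purpose: str
    algorithm: str  # e.g. "RSA-2048", "ECDH-P256", "AES-256-GCM"


def needs_migration(use: CryptoUse) -> bool:
    # Compare only the family name, ignoring key sizes and modes.
    family = use.algorithm.split("-")[0].upper()
    return family in QUANTUM_VULNERABLE


def migration_candidates(inventory: List[CryptoUse]) -> List[CryptoUse]:
    return [use for use in inventory if needs_migration(use)]


# Hypothetical inventory entries, for illustration only.
inventory = [
    CryptoUse("vpn-gateway", "key exchange", "ECDH-P256"),
    CryptoUse("backup-store", "bulk encryption", "AES-256-GCM"),
    CryptoUse("web-frontend", "TLS certificate", "RSA-2048"),
]

for use in migration_candidates(inventory):
    print(f"{use.system}: {use.purpose} uses {use.algorithm}")
```

The filtering is trivial once the inventory exists. The hard part Newhouse describes is building that list in the first place, since public key cryptography is often buried inside libraries, appliances and vendor products.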

“A lot of people don’t have any real sense of where [their public key encryption] are deployed in their systems. The non-technical folks that rely on them probably just don’t really recognize that it's all going to be rather complicated,” said Bill Newhouse, a cybersecurity engineer at NIST, during a presentation to the Information Security and Privacy Advisory Board this week.

However, beyond the logistical hurdles, the future of the quantum computing landscape is still murky enough to create substantial pockets of uncertainty that can make it impractical or dangerous for organizations to put all their eggs in one basket.

For instance: until a working quantum computer advanced enough to break classical encryption comes along, officials at NIST are basing their algorithmic choices in part on mathematical estimations of what those computers might do. That means that the algorithms we think will protect us may actually fall short — and in fact NIST official Dustin Moody told SC Media earlier this year that each round of the post-quantum cryptography selection process has revealed a previously unknown or unforeseen weakness in one of the algorithms.

Another variable: a prominent French research organization is claiming that two of the lattice-based algorithms selected as finalists by NIST violate technology patents it owns, and it is demanding the right to receive royalties and issue licenses for their use. That dispute has yet to be resolved, though at least one research paper has argued the claims may have little merit.

Over the next six months, the agency’s cyber center will meet with members of industry and academia to begin building, testing and troubleshooting migrations in a lab setting. They’ll also continue to work with agencies like NSA and the Cybersecurity and Infrastructure Security Agency, which both have defensive cybersecurity missions and in-house expertise on the challenges around encryption.

That work will feed into follow-up awareness campaigns. Newhouse said the agency needs to conduct outreach “to convince people that this is a challenge to be taken on and there are reasonable steps that we can show… because we’ve done it in our laboratory.”

Many hard questions, few clear answers

William Barker, a NIST associate researcher, said there is a bundle of outstanding questions about the effect these new algorithms will have on the broader IT ecosystems they operate in, and that those questions must be worked out in advance because if the agency doesn’t have the answers, it’s almost certain that other organizations are similarly in the dark.

“How are [we] going to utilize the new algorithms in our protocols, what is this going to do to [Transport Layer Security]? What does hybrid mean? Do we look at doing certificates that have keys for both the legacy algorithm and the new algorithm or is that creating an extra step that… may actually slow down migration?” Barker said as he ran through the list of unknowns that officials are trying to work out.

As they have before, NIST officials are emphasizing that despite the seriousness of the looming threat, quantum computers capable of breaking classical forms of encryption are still widely considered to be years or decades away from reality.

While the agency is still on track to finish picking a handful of new algorithms this year, the accompanying standards will still need to go through a lengthy review and public comment process, and officials said they don’t expect them to be formally in place until at least 2024. Although it is voluntary, NIST guidance is often widely adopted across private industry, and post-quantum encryption is such a specialized subject that the agency's Post-Quantum Cryptography project is being closely watched by other standards bodies around the world.

For its part, the agency continues to advise organizations to hold off on buying commercial encryption products that claim to offer protection from quantum codebreaking, saying they are unvetted. Ironically, many vendors in the quantum space also advocate crypto agility and argue that a company and its customers can switch out a bad algorithm if it later turns out to be flawed.

While the threat of data hoarding — collecting and storing encrypted data today in order to crack it years down the line — requires national security agencies and other organizations holding sensitive data to consider their options for replacement algorithms today, there’s still plenty of runway for IT and security teams to approach the coming transition thoughtfully.

“This is no Y2K or a doomsday scenario. All your keys won’t turn to dust,” NIST Computer Security Division Chief Matthew Scholl intones in a recent video the agency made to raise awareness around the coming transition. “It’s no time to panic, it’s time to plan wisely.”