The attack on the U.S. Capitol on January 6 raises many deep ethical questions for companies like us that offer face recognition (FR) systems based on artificial intelligence (AI) to our customers around the world.

Law enforcement agencies in Washington, D.C., and elsewhere use the technology available to them to determine who might have been involved in violent criminal activity, such as the events that occurred at the Capitol earlier this month.

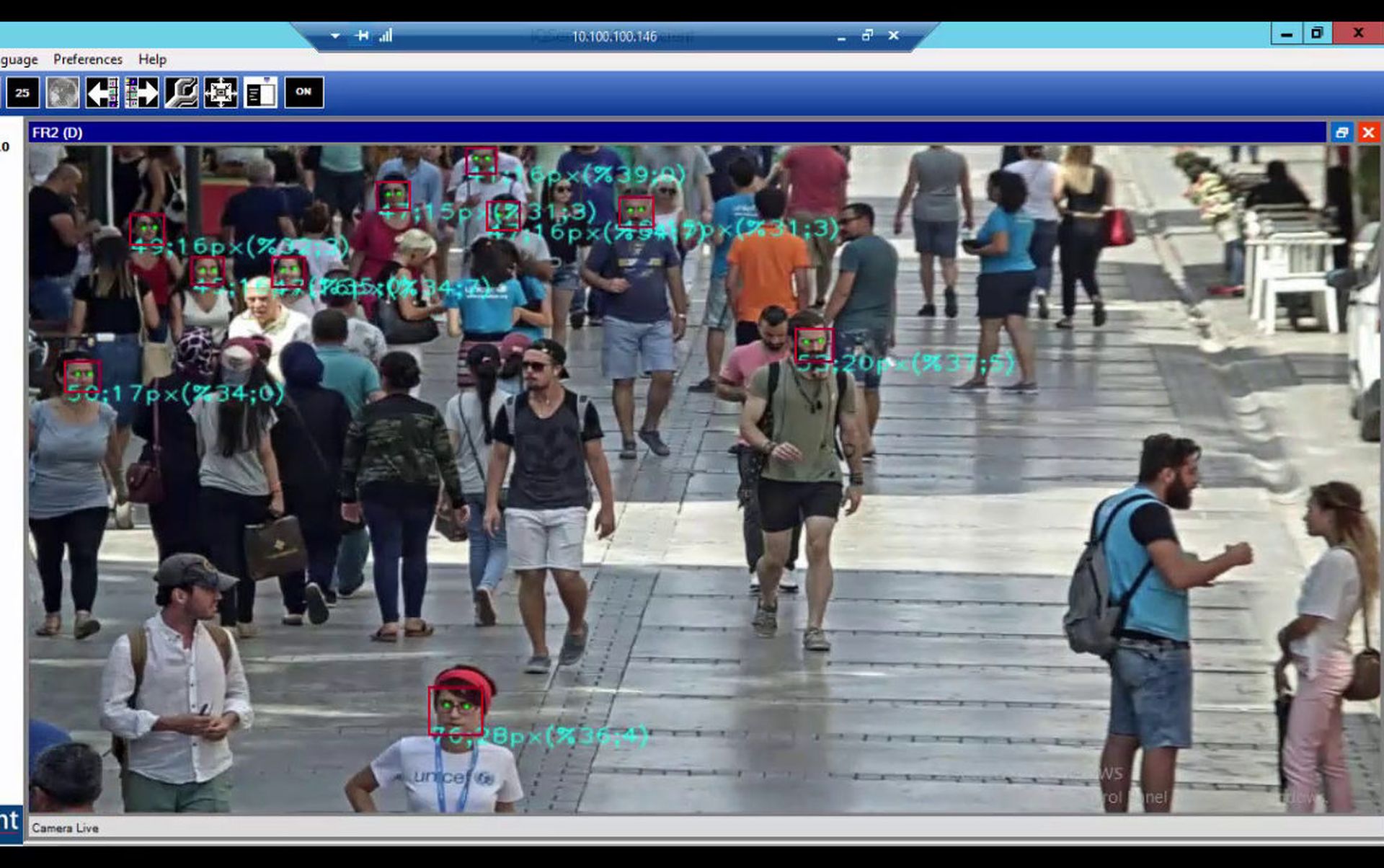

However, they are handicapped by the limitations of the technologies at their disposal. The FR systems generally available to law enforcement today rely on high-resolution video cameras and recognize faces only at close range. For example, if the footage comes from a conventional CCTV camera with a resolution of one to two megapixels, potential people of interest would have to be within about six to eight feet of the camera to be recognized. People are rarely that close to a camera except in access control situations. These FR systems also tend to operate without any privacy controls.

Law enforcement agencies may also use video extracts from social media, which usually deliver low-resolution images of people at a distance, often shot with a camera that is itself moving. Most currently available FR systems are unable to accurately identify or track people of interest in such images.

Police agencies need systems that can perform face recognition on very low-resolution images: systems that can recognize people in a crowd at a distance of around 70 feet in video from one- or two-megapixel cameras, even when those cameras are moving, as on a drone or in social media footage.
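To give a rough sense of why distance matters so much, the sketch below estimates how many pixels span a face at the two distances mentioned above. The 1920-pixel frame width (roughly a two-megapixel camera), the 90-degree horizontal field of view and the half-foot face width are illustrative assumptions, not figures from any particular camera or FR product.

```python
import math


def pixels_across_face(distance_ft, horizontal_px=1920, fov_deg=90.0, face_width_ft=0.5):
    """Estimate how many horizontal pixels cover a face at a given distance.

    All defaults are illustrative assumptions: a ~2 MP frame that is 1920 px
    wide, a 90-degree horizontal field of view, and a face about 0.5 ft wide.
    """
    # Width of the scene covered by the frame at this distance.
    scene_width_ft = 2 * distance_ft * math.tan(math.radians(fov_deg / 2))
    # Share of the frame's pixels that fall on the face.
    return horizontal_px * face_width_ft / scene_width_ft


if __name__ == "__main__":
    for distance in (8, 70):
        px = pixels_across_face(distance)
        print(f"{distance} ft: about {px:.0f} px across the face")
```

Under these assumptions a face spans roughly 60 pixels at 8 feet but only about 7 pixels at 70 feet, which illustrates how little image data a system has to work with at crowd distances.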

Such systems are available, but their suppliers have been reluctant to offer these more advanced FR technologies to law enforcement agencies in the U.S. out of concern that the technology could be misused against other citizens.

The ethical issues at hand

There are three core ethical issues that must be addressed for artificial intelligence systems: privacy, bias, and transparency of decision making and use.

Privacy protection has become a critical requirement for any FR system. The general public should not have their privacy invaded by intrusive policing systems, and we generally have the ability to redact all faces using encryption. The police should have the ability to unredact videos, perhaps when authorized by a warrant or other transparent legal process, whereby a digital key is used to decrypt the video image.

We can eliminate bias by using FR systems based on pattern-matching algorithms rather than on deep learning, which requires large datasets that can carry an inherent bias. In any case, such systems should always involve humans in the final decision making to compensate for any systemic bias.

This brings us to the final pillar, transparency, which we can address with good governance and auditing. The system must keep auditable logs of all actions taken by the authorities using it, so that any misconduct can be reviewed.
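One common way to make such logs tamper-evident is to chain each entry to the previous one with a cryptographic hash, so that any later alteration breaks the chain. The sketch below is a minimal illustration of that general idea, not a description of any vendor's implementation; the `AuditLog` class and the field names (`officer_id`, `action`, `warrant_ref`) are hypothetical.

```python
import hashlib
import json
import time

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    """SHA-256 over a canonical JSON encoding of an entry's contents."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Hypothetical append-only log in which each entry hashes its predecessor."""

    def __init__(self):
        self.entries = []

    def record(self, officer_id, action, warrant_ref=None):
        """Append an entry describing who did what, and under which authority."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {
            "ts": time.time(),
            "officer_id": officer_id,
            "action": action,
            "warrant_ref": warrant_ref,
            "prev_hash": prev_hash,
        }
        entry = dict(body, hash=_digest(body))
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True only if no entry has been altered, removed or reordered."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.record("officer-17", "search_face", warrant_ref=None)
    log.record("officer-17", "unredact_video", warrant_ref="W-001")
    print("log intact:", log.verify())
```

A reviewer can rerun `verify()` at any time; editing an earlier entry changes its hash and invalidates every entry after it. A real deployment would also need secure storage and access control for the log itself, which this sketch leaves out.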

By and large, tech companies build their technologies from the ground up along ethical frameworks aligned with the societal values of the U.S. and the European Union (EU), values common to most Western democracies. But tech companies cannot themselves regulate the appropriate use of artificial intelligence. Such decisions are best made by lawmakers, regulators and the judiciary, in partnership with industry and civil society. The decision has been made much easier in the EU, which has passed strong privacy regulations, encapsulated in the GDPR, with clear rules on how such technologies may be used.

We need new privacy and appropriate-use legislation globally. Internal controls for organizations that use FR and auditable records of the machine-human interaction are also required to build a new social contract for the responsible use of AI by government and non-government organizations.

As a society, Americans must establish a consistent position at both state and federal levels on how they will use such technologies to protect themselves while ensuring that the technologies are not misused. Until this happens, companies like us will proceed cautiously, making our own judgments on how and where to strike the balance on these tough ethical questions.

Rustom Kanga, founder and CEO, iOmniscient